NeuralNetworkRuntime

Overview

Provides Neural Network Runtime (NNRt) APIs for accelerating model inference.

Since: 9

System capability: SystemCapability.Ai.NeuralNetworkRuntime

Summary

File

| Name | Description |

|---|---|

| neural_network_core.h | Defines APIs for the Neural Network Core module. The AI inference framework uses the native interfaces provided by Neural Network Core to build models and perform inference and computing on acceleration devices. |

| neural_network_runtime.h | Defines APIs for NNRt. The AI inference framework uses the native APIs provided by the NNRt to construct and build models. |

| neural_network_runtime_type.h | Defines the structure and enums for NNRt. |

Structs

| Name | Description |

|---|---|

| OH_NN_UInt32Array | Used to store a 32-bit unsigned integer array. |

| OH_NN_QuantParam | Used to define the quantization information. |

| OH_NN_Tensor | Used to define the tensor structure. |

| OH_NN_Memory | Used to define the memory structure. |

Types

| Name | Description |

|---|---|

| OH_NNModel | Model handle. |

| OH_NNCompilation | Compiler handle. |

| OH_NNExecutor | Executor handle. |

| NN_QuantParam | Quantization parameter handle. |

| NN_TensorDesc | Tensor description handle. |

| NN_Tensor | Tensor handle. |

| (*NN_OnRunDone) (void *userData, OH_NN_ReturnCode errCode, void *outputTensor[], int32_t outputCount) | Handle of the callback processing function invoked when the asynchronous inference ends. |

| (*NN_OnServiceDied) (void *userData) | Handle of the callback processing function invoked when the device driver service terminates unexpectedly during asynchronous inference. |

| OH_NN_UInt32Array | Used to store a 32-bit unsigned integer array. |

| OH_NN_QuantParam | Used to define the quantization information. |

| OH_NN_Tensor | Used to define the tensor structure. |

| OH_NN_Memory | Used to define the memory structure. |

Enums

| Name | Description |

|---|---|

| OH_NN_PerformanceMode { OH_NN_PERFORMANCE_NONE = 0, OH_NN_PERFORMANCE_LOW = 1, OH_NN_PERFORMANCE_MEDIUM = 2, OH_NN_PERFORMANCE_HIGH = 3, OH_NN_PERFORMANCE_EXTREME = 4 } | Performance modes of the device. |

| OH_NN_Priority { OH_NN_PRIORITY_NONE = 0, OH_NN_PRIORITY_LOW = 1, OH_NN_PRIORITY_MEDIUM = 2, OH_NN_PRIORITY_HIGH = 3 } | Priorities of a model inference task. |

| OH_NN_ReturnCode { OH_NN_SUCCESS = 0, OH_NN_FAILED = 1, OH_NN_INVALID_PARAMETER = 2, OH_NN_MEMORY_ERROR = 3, OH_NN_OPERATION_FORBIDDEN = 4, OH_NN_NULL_PTR = 5, OH_NN_INVALID_FILE = 6, OH_NN_UNAVALIDABLE_DEVICE = 7, OH_NN_INVALID_PATH = 8, OH_NN_TIMEOUT = 9, OH_NN_UNSUPPORTED = 10, OH_NN_CONNECTION_EXCEPTION = 11, OH_NN_SAVE_CACHE_EXCEPTION = 12, OH_NN_DYNAMIC_SHAPE = 13, OH_NN_UNAVAILABLE_DEVICE = 14 } | Error codes for NNRt. |

| OH_NN_FuseType : int8_t { OH_NN_FUSED_NONE = 0, OH_NN_FUSED_RELU = 1, OH_NN_FUSED_RELU6 = 2 } | Activation function types in the fusion operator for NNRt. |

| OH_NN_Format { OH_NN_FORMAT_NONE = 0, OH_NN_FORMAT_NCHW = 1, OH_NN_FORMAT_NHWC = 2, OH_NN_FORMAT_ND = 3 } | Formats of tensor data. |

| OH_NN_DeviceType { OH_NN_OTHERS = 0, OH_NN_CPU = 1, OH_NN_GPU = 2, OH_NN_ACCELERATOR = 3 } | Device types supported by NNRt. |

| OH_NN_DataType { OH_NN_UNKNOWN = 0, OH_NN_BOOL = 1, OH_NN_INT8 = 2, OH_NN_INT16 = 3, OH_NN_INT32 = 4, OH_NN_INT64 = 5, OH_NN_UINT8 = 6, OH_NN_UINT16 = 7, OH_NN_UINT32 = 8, OH_NN_UINT64 = 9, OH_NN_FLOAT16 = 10, OH_NN_FLOAT32 = 11, OH_NN_FLOAT64 = 12 } | Data types supported by NNRt. |

| OH_NN_OperationType { OH_NN_OPS_ADD = 1, OH_NN_OPS_AVG_POOL = 2, OH_NN_OPS_BATCH_NORM = 3, OH_NN_OPS_BATCH_TO_SPACE_ND = 4, OH_NN_OPS_BIAS_ADD = 5, OH_NN_OPS_CAST = 6, OH_NN_OPS_CONCAT = 7, OH_NN_OPS_CONV2D = 8, OH_NN_OPS_CONV2D_TRANSPOSE = 9, OH_NN_OPS_DEPTHWISE_CONV2D_NATIVE = 10, OH_NN_OPS_DIV = 11, OH_NN_OPS_ELTWISE = 12, OH_NN_OPS_EXPAND_DIMS = 13, OH_NN_OPS_FILL = 14, OH_NN_OPS_FULL_CONNECTION = 15, OH_NN_OPS_GATHER = 16, OH_NN_OPS_HSWISH = 17, OH_NN_OPS_LESS_EQUAL = 18, OH_NN_OPS_MATMUL = 19, OH_NN_OPS_MAXIMUM = 20, OH_NN_OPS_MAX_POOL = 21, OH_NN_OPS_MUL = 22, OH_NN_OPS_ONE_HOT = 23, OH_NN_OPS_PAD = 24, OH_NN_OPS_POW = 25, OH_NN_OPS_SCALE = 26, OH_NN_OPS_SHAPE = 27, OH_NN_OPS_SIGMOID = 28, OH_NN_OPS_SLICE = 29, OH_NN_OPS_SOFTMAX = 30, OH_NN_OPS_SPACE_TO_BATCH_ND = 31, OH_NN_OPS_SPLIT = 32, OH_NN_OPS_SQRT = 33, OH_NN_OPS_SQUARED_DIFFERENCE = 34, OH_NN_OPS_SQUEEZE = 35, OH_NN_OPS_STACK = 36, OH_NN_OPS_STRIDED_SLICE = 37, OH_NN_OPS_SUB = 38, OH_NN_OPS_TANH = 39, OH_NN_OPS_TILE = 40, OH_NN_OPS_TRANSPOSE = 41, OH_NN_OPS_REDUCE_MEAN = 42, OH_NN_OPS_RESIZE_BILINEAR = 43, OH_NN_OPS_RSQRT = 44, OH_NN_OPS_RESHAPE = 45, OH_NN_OPS_PRELU = 46, OH_NN_OPS_RELU = 47, OH_NN_OPS_RELU6 = 48, OH_NN_OPS_LAYER_NORM = 49, OH_NN_OPS_REDUCE_PROD = 50, OH_NN_OPS_REDUCE_ALL = 51, OH_NN_OPS_QUANT_DTYPE_CAST = 52, OH_NN_OPS_TOP_K = 53, OH_NN_OPS_ARG_MAX = 54, OH_NN_OPS_UNSQUEEZE = 55, OH_NN_OPS_GELU = 56 } | Operator types supported by NNRt. |

| OH_NN_TensorType { OH_NN_TENSOR = 0, OH_NN_ADD_ACTIVATIONTYPE = 1, OH_NN_AVG_POOL_KERNEL_SIZE = 2, OH_NN_AVG_POOL_STRIDE = 3, OH_NN_AVG_POOL_PAD_MODE = 4, OH_NN_AVG_POOL_PAD = 5, OH_NN_AVG_POOL_ACTIVATION_TYPE = 6, OH_NN_BATCH_NORM_EPSILON = 7, OH_NN_BATCH_TO_SPACE_ND_BLOCKSIZE = 8, OH_NN_BATCH_TO_SPACE_ND_CROPS = 9, OH_NN_CONCAT_AXIS = 10, OH_NN_CONV2D_STRIDES = 11, OH_NN_CONV2D_PAD = 12, OH_NN_CONV2D_DILATION = 13, OH_NN_CONV2D_PAD_MODE = 14, OH_NN_CONV2D_ACTIVATION_TYPE = 15, OH_NN_CONV2D_GROUP = 16, OH_NN_CONV2D_TRANSPOSE_STRIDES = 17, OH_NN_CONV2D_TRANSPOSE_PAD = 18, OH_NN_CONV2D_TRANSPOSE_DILATION = 19, OH_NN_CONV2D_TRANSPOSE_OUTPUT_PADDINGS = 20, OH_NN_CONV2D_TRANSPOSE_PAD_MODE = 21, OH_NN_CONV2D_TRANSPOSE_ACTIVATION_TYPE = 22, OH_NN_CONV2D_TRANSPOSE_GROUP = 23, OH_NN_DEPTHWISE_CONV2D_NATIVE_STRIDES = 24, OH_NN_DEPTHWISE_CONV2D_NATIVE_PAD = 25, OH_NN_DEPTHWISE_CONV2D_NATIVE_DILATION = 26, OH_NN_DEPTHWISE_CONV2D_NATIVE_PAD_MODE = 27, OH_NN_DEPTHWISE_CONV2D_NATIVE_ACTIVATION_TYPE = 28, OH_NN_DIV_ACTIVATIONTYPE = 29, OH_NN_ELTWISE_MODE = 30, OH_NN_FULL_CONNECTION_AXIS = 31, OH_NN_FULL_CONNECTION_ACTIVATIONTYPE = 32, OH_NN_MATMUL_TRANSPOSE_A = 33, OH_NN_MATMUL_TRANSPOSE_B = 34, OH_NN_MATMUL_ACTIVATION_TYPE = 35, OH_NN_MAX_POOL_KERNEL_SIZE = 36, OH_NN_MAX_POOL_STRIDE = 37, OH_NN_MAX_POOL_PAD_MODE = 38, OH_NN_MAX_POOL_PAD = 39, OH_NN_MAX_POOL_ACTIVATION_TYPE = 40, OH_NN_MUL_ACTIVATION_TYPE = 41, OH_NN_ONE_HOT_AXIS = 42, OH_NN_PAD_CONSTANT_VALUE = 43, OH_NN_SCALE_ACTIVATIONTYPE = 44, OH_NN_SCALE_AXIS = 45, OH_NN_SOFTMAX_AXIS = 46, OH_NN_SPACE_TO_BATCH_ND_BLOCK_SHAPE = 47, OH_NN_SPACE_TO_BATCH_ND_PADDINGS = 48, OH_NN_SPLIT_AXIS = 49, OH_NN_SPLIT_OUTPUT_NUM = 50, OH_NN_SPLIT_SIZE_SPLITS = 51, OH_NN_SQUEEZE_AXIS = 52, OH_NN_STACK_AXIS = 53, OH_NN_STRIDED_SLICE_BEGIN_MASK = 54, OH_NN_STRIDED_SLICE_END_MASK = 55, OH_NN_STRIDED_SLICE_ELLIPSIS_MASK = 56, OH_NN_STRIDED_SLICE_NEW_AXIS_MASK = 57, OH_NN_STRIDED_SLICE_SHRINK_AXIS_MASK = 58, OH_NN_SUB_ACTIVATIONTYPE = 59, OH_NN_REDUCE_MEAN_KEEP_DIMS = 60, OH_NN_RESIZE_BILINEAR_NEW_HEIGHT = 61, OH_NN_RESIZE_BILINEAR_NEW_WIDTH = 62, OH_NN_RESIZE_BILINEAR_PRESERVE_ASPECT_RATIO = 63, OH_NN_RESIZE_BILINEAR_COORDINATE_TRANSFORM_MODE = 64, OH_NN_RESIZE_BILINEAR_EXCLUDE_OUTSIDE = 65, OH_NN_LAYER_NORM_BEGIN_NORM_AXIS = 66, OH_NN_LAYER_NORM_EPSILON = 67, OH_NN_LAYER_NORM_BEGIN_PARAM_AXIS = 68, OH_NN_LAYER_NORM_ELEMENTWISE_AFFINE = 69, OH_NN_REDUCE_PROD_KEEP_DIMS = 70, OH_NN_REDUCE_ALL_KEEP_DIMS = 71, OH_NN_QUANT_DTYPE_CAST_SRC_T = 72, OH_NN_QUANT_DTYPE_CAST_DST_T = 73, OH_NN_TOP_K_SORTED = 74, OH_NN_ARG_MAX_AXIS = 75, OH_NN_ARG_MAX_KEEPDIMS = 76, OH_NN_UNSQUEEZE_AXIS = 77 } | Tensor types. |

Functions

| Name | Description |

|---|---|

| *OH_NNCompilation_Construct (const OH_NNModel *model) | Creates a model building instance of the OH_NNCompilation type. |

| *OH_NNCompilation_ConstructWithOfflineModelFile (const char *modelPath) | Creates a model building instance based on an offline model file. |

| *OH_NNCompilation_ConstructWithOfflineModelBuffer (const void *modelBuffer, size_t modelSize) | Creates a model building instance based on the offline model buffer. |

| *OH_NNCompilation_ConstructForCache () | Creates an empty model building instance for later recovery from the model cache. |

| OH_NNCompilation_ExportCacheToBuffer (OH_NNCompilation *compilation, const void *buffer, size_t length, size_t *modelSize) | Writes the model cache to the specified buffer. |

| OH_NNCompilation_ImportCacheFromBuffer (OH_NNCompilation *compilation, const void *buffer, size_t modelSize) | Reads the model cache from the specified buffer. |

| OH_NNCompilation_AddExtensionConfig (OH_NNCompilation *compilation, const char *configName, const void *configValue, const size_t configValueSize) | Adds extended configurations for custom device attributes. |

| OH_NNCompilation_SetDevice (OH_NNCompilation *compilation, size_t deviceID) | Sets the device for model building and computing. |

| OH_NNCompilation_SetCache (OH_NNCompilation *compilation, const char *cachePath, uint32_t version) | Sets the cache directory and version for model building. |

| OH_NNCompilation_SetPerformanceMode (OH_NNCompilation *compilation, OH_NN_PerformanceMode performanceMode) | Sets the performance mode for model computing. |

| OH_NNCompilation_SetPriority (OH_NNCompilation *compilation, OH_NN_Priority priority) | Sets the priority for model computing. |

| OH_NNCompilation_EnableFloat16 (OH_NNCompilation *compilation, bool enableFloat16) | Enables float16 for computing. |

| OH_NNCompilation_Build (OH_NNCompilation *compilation) | Performs model building. |

| OH_NNCompilation_Destroy (OH_NNCompilation **compilation) | Destroys a model building instance of the OH_NNCompilation type. |

| *OH_NNTensorDesc_Create () | Creates an NN_TensorDesc instance. |

| OH_NNTensorDesc_Destroy (NN_TensorDesc **tensorDesc) | Releases an NN_TensorDesc instance. |

| OH_NNTensorDesc_SetName (NN_TensorDesc *tensorDesc, const char *name) | Sets the name of an NN_TensorDesc instance. |

| OH_NNTensorDesc_GetName (const NN_TensorDesc *tensorDesc, const char **name) | Obtains the name of an NN_TensorDesc instance. |

| OH_NNTensorDesc_SetDataType (NN_TensorDesc *tensorDesc, OH_NN_DataType dataType) | Sets the data type of an NN_TensorDesc instance. |

| OH_NNTensorDesc_GetDataType (const NN_TensorDesc *tensorDesc, OH_NN_DataType *dataType) | Obtains the data type of an NN_TensorDesc instance. |

| OH_NNTensorDesc_SetShape (NN_TensorDesc *tensorDesc, const int32_t *shape, size_t shapeLength) | Sets the data shape of an NN_TensorDesc instance. |

| OH_NNTensorDesc_GetShape (const NN_TensorDesc *tensorDesc, int32_t **shape, size_t *shapeLength) | Obtains the shape of an NN_TensorDesc instance. |

| OH_NNTensorDesc_SetFormat (NN_TensorDesc *tensorDesc, OH_NN_Format format) | Sets the data format of an NN_TensorDesc instance. |

| OH_NNTensorDesc_GetFormat (const NN_TensorDesc *tensorDesc, OH_NN_Format *format) | Obtains the data format of an NN_TensorDesc instance. |

| OH_NNTensorDesc_GetElementCount (const NN_TensorDesc *tensorDesc, size_t *elementCount) | Obtains the number of elements in an NN_TensorDesc instance. |

| OH_NNTensorDesc_GetByteSize (const NN_TensorDesc *tensorDesc, size_t *byteSize) | Obtains the number of bytes occupied by the tensor data, calculated from the shape and data type of an NN_TensorDesc instance. |

| *OH_NNTensor_Create (size_t deviceID, NN_TensorDesc *tensorDesc) | Creates an NN_Tensor instance from NN_TensorDesc. |

| *OH_NNTensor_CreateWithSize (size_t deviceID, NN_TensorDesc *tensorDesc, size_t size) | Creates an NN_Tensor instance based on the specified memory size and NN_TensorDesc instance. |

| *OH_NNTensor_CreateWithFd (size_t deviceID, NN_TensorDesc *tensorDesc, int fd, size_t size, size_t offset) | Creates an NN_Tensor instance based on the specified file descriptor of the shared memory and NN_TensorDesc instance. |

| OH_NNTensor_Destroy (NN_Tensor **tensor) | Destroys an NN_Tensor instance. |

| *OH_NNTensor_GetTensorDesc (const NN_Tensor *tensor) | Obtains an NN_TensorDesc instance of NN_Tensor. |

| *OH_NNTensor_GetDataBuffer (const NN_Tensor *tensor) | Obtains the memory address of NN_Tensor data. |

| OH_NNTensor_GetFd (const NN_Tensor *tensor, int *fd) | Obtains the file descriptor of the shared memory where NN_Tensor data is stored. |

| OH_NNTensor_GetSize (const NN_Tensor *tensor, size_t *size) | Obtains the size of the shared memory where the NN_Tensor data is stored. |

| OH_NNTensor_GetOffset (const NN_Tensor *tensor, size_t *offset) | Obtains the offset of NN_Tensor data in the shared memory. |

| *OH_NNExecutor_Construct (OH_NNCompilation *compilation) | Creates an OH_NNExecutor instance. |

| OH_NNExecutor_GetOutputShape (OH_NNExecutor *executor, uint32_t outputIndex, int32_t **shape, uint32_t *shapeLength) | Obtains the dimension information about the output tensor. |

| OH_NNExecutor_Destroy (OH_NNExecutor **executor) | Destroys an executor instance to release the memory occupied by it. |

| OH_NNExecutor_GetInputCount (const OH_NNExecutor *executor, size_t *inputCount) | Obtains the number of input tensors. |

| OH_NNExecutor_GetOutputCount (const OH_NNExecutor *executor, size_t *outputCount) | Obtains the number of output tensors. |

| *OH_NNExecutor_CreateInputTensorDesc (const OH_NNExecutor *executor, size_t index) | Creates the description of an input tensor based on the specified index value. |

| *OH_NNExecutor_CreateOutputTensorDesc (const OH_NNExecutor *executor, size_t index) | Creates the description of an output tensor based on the specified index value. |

| OH_NNExecutor_GetInputDimRange (const OH_NNExecutor *executor, size_t index, size_t **minInputDims, size_t **maxInputDims, size_t *shapeLength) | Obtains the dimension range of all input tensors. |

| OH_NNExecutor_SetOnRunDone (OH_NNExecutor *executor, NN_OnRunDone onRunDone) | Sets the callback processing function invoked when the asynchronous inference ends. |

| OH_NNExecutor_SetOnServiceDied (OH_NNExecutor *executor, NN_OnServiceDied onServiceDied) | Sets the callback processing function invoked when the device driver service terminates unexpectedly during asynchronous inference. |

| OH_NNExecutor_RunSync (OH_NNExecutor *executor, NN_Tensor *inputTensor[], size_t inputCount, NN_Tensor *outputTensor[], size_t outputCount) | Performs synchronous inference. |

| OH_NNExecutor_RunAsync (OH_NNExecutor *executor, NN_Tensor *inputTensor[], size_t inputCount, NN_Tensor *outputTensor[], size_t outputCount, int32_t timeout, void *userData) | Performs asynchronous inference. |

| OH_NNDevice_GetAllDevicesID (const size_t **allDevicesID, uint32_t *deviceCount) | Obtains the IDs of the devices connected to NNRt. |

| OH_NNDevice_GetName (size_t deviceID, const char **name) | Obtains the name of the specified device. |

| OH_NNDevice_GetType (size_t deviceID, OH_NN_DeviceType *deviceType) | Obtains the type of the specified device. |

| *OH_NNQuantParam_Create () | Creates an NN_QuantParam instance. |

| OH_NNQuantParam_SetScales (NN_QuantParam *quantParams, const double *scales, size_t quantCount) | Sets the scaling coefficient for an NN_QuantParam instance. |

| OH_NNQuantParam_SetZeroPoints (NN_QuantParam *quantParams, const int32_t *zeroPoints, size_t quantCount) | Sets the zero point for an NN_QuantParam instance. |

| OH_NNQuantParam_SetNumBits (NN_QuantParam *quantParams, const uint32_t *numBits, size_t quantCount) | Sets the number of quantization bits for an NN_QuantParam instance. |

| OH_NNQuantParam_Destroy (NN_QuantParam **quantParams) | Destroys an NN_QuantParam instance. |

| *OH_NNModel_Construct (void) | Creates a model instance of the OH_NNModel type. The model is then constructed by using the APIs provided by OH_NNModel. |

| OH_NNModel_AddTensorToModel (OH_NNModel *model, const NN_TensorDesc *tensorDesc) | Adds a tensor to a model instance. |

| OH_NNModel_SetTensorData (OH_NNModel *model, uint32_t index, const void *dataBuffer, size_t length) | Sets the tensor value. |

| OH_NNModel_SetTensorQuantParams (OH_NNModel *model, uint32_t index, NN_QuantParam *quantParam) | Sets the quantization parameters of a tensor. For details, see NN_QuantParam. |

| OH_NNModel_SetTensorType (OH_NNModel *model, uint32_t index, OH_NN_TensorType tensorType) | Sets the tensor type. For details, see OH_NN_TensorType. |

| OH_NNModel_AddOperation (OH_NNModel *model, OH_NN_OperationType op, const OH_NN_UInt32Array *paramIndices, const OH_NN_UInt32Array *inputIndices, const OH_NN_UInt32Array *outputIndices) | Adds an operator to a model instance. |

| OH_NNModel_SpecifyInputsAndOutputs (OH_NNModel *model, const OH_NN_UInt32Array *inputIndices, const OH_NN_UInt32Array *outputIndices) | Specifies the indices of the input and output tensors of a model. |

| OH_NNModel_Finish (OH_NNModel *model) | Completes model composition. |

| OH_NNModel_Destroy (OH_NNModel **model) | Destroys a model instance. |

| OH_NNModel_GetAvailableOperations (OH_NNModel *model, size_t deviceID, const bool **isSupported, uint32_t *opCount) | Checks whether all operators in a model are supported by the device. The result is indicated by a Boolean value. |

| OH_NNModel_AddTensor (OH_NNModel *model, const OH_NN_Tensor *tensor) | Adds a tensor to a model instance. |

| OH_NNExecutor_SetInput (OH_NNExecutor *executor, uint32_t inputIndex, const OH_NN_Tensor *tensor, const void *dataBuffer, size_t length) | Sets the data for a single model input. |

| OH_NNExecutor_SetOutput (OH_NNExecutor *executor, uint32_t outputIndex, void *dataBuffer, size_t length) | Sets the memory for a single model output. |

| OH_NNExecutor_Run (OH_NNExecutor *executor) | Executes model inference. |

| *OH_NNExecutor_AllocateInputMemory (OH_NNExecutor *executor, uint32_t inputIndex, size_t length) | Allocates shared memory for a single model input on the device. |

| *OH_NNExecutor_AllocateOutputMemory (OH_NNExecutor *executor, uint32_t outputIndex, size_t length) | Allocates shared memory for a single model output on the device. |

| OH_NNExecutor_DestroyInputMemory (OH_NNExecutor *executor, uint32_t inputIndex, OH_NN_Memory **memory) | Releases the input memory pointed to by the OH_NN_Memory instance. |

| OH_NNExecutor_DestroyOutputMemory (OH_NNExecutor *executor, uint32_t outputIndex, OH_NN_Memory **memory) | Releases the output memory pointed to by the OH_NN_Memory instance. |

| OH_NNExecutor_SetInputWithMemory (OH_NNExecutor *executor, uint32_t inputIndex, const OH_NN_Tensor *tensor, const OH_NN_Memory *memory) | Specifies the shared memory pointed to by the OH_NN_Memory instance as the memory used by a single model input. |

| OH_NNExecutor_SetOutputWithMemory (OH_NNExecutor *executor, uint32_t outputIndex, const OH_NN_Memory *memory) | Specifies the shared memory pointed to by the OH_NN_Memory instance as the memory used by a single model output. |

Type Description

NN_OnRunDone

typedef void(*NN_OnRunDone) (void *userData, OH_NN_ReturnCode errCode, void *outputTensor[], int32_t outputCount)

Description

Handle of the callback processing function invoked when the asynchronous inference ends.

Use the userData parameter to specify the asynchronous inference to query. The value of userData is the same as that passed to OH_NNExecutor_RunAsync. Use the errCode parameter to obtain the return result (defined by OH_NN_ReturnCode) of the asynchronous inference.

Since: 11

Parameters

| Name | Description |

|---|---|

| userData | Identifier of asynchronous inference. The value is the same as the userData parameter passed to OH_NNExecutor_RunAsync. |

| errCode | Return result (defined by OH_NN_ReturnCode) of the asynchronous inference. |

| outputTensor | Output tensor for asynchronous inference. The value is the same as the outputTensor parameter passed to OH_NNExecutor_RunAsync. |

| outputCount | Number of output tensors for asynchronous inference. The value is the same as the outputCount parameter passed to OH_NNExecutor_RunAsync. |
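The following is a minimal sketch of a callback whose signature matches the NN_OnRunDone typedef above. So that it compiles without the SDK, it declares local stand-ins for OH_NN_ReturnCode (only two of its values) and NN_OnRunDone, copied from this page; real code would include the NNRt headers instead. RunContext and on_run_done are hypothetical names used only for illustration.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* Local stand-ins so the sketch compiles without the SDK headers.
 * Real code would include the NNRt headers instead. */
typedef enum { OH_NN_SUCCESS = 0, OH_NN_FAILED = 1 } OH_NN_ReturnCode;
typedef void (*NN_OnRunDone)(void *userData, OH_NN_ReturnCode errCode,
                             void *outputTensor[], int32_t outputCount);

/* Hypothetical per-request context that the caller passes as userData. */
typedef struct {
    int requestId;           /* identifies the asynchronous inference */
    int done;                /* set to 1 once the callback has run */
    OH_NN_ReturnCode result; /* errCode reported by the callback */
    int32_t outputs;         /* number of usable output tensors */
} RunContext;

/* Hypothetical callback: records the outcome in the caller's context. */
static void on_run_done(void *userData, OH_NN_ReturnCode errCode,
                        void *outputTensor[], int32_t outputCount)
{
    RunContext *ctx = (RunContext *)userData;
    assert(ctx != NULL);
    (void)outputTensor; /* the tensors are not inspected in this sketch */
    ctx->done = 1;
    ctx->result = errCode;
    ctx->outputs = (errCode == OH_NN_SUCCESS) ? outputCount : 0;
}
```

Because userData is returned unchanged, a small context structure like this is one way to tell concurrent requests apart.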

NN_OnServiceDied

typedef void(*NN_OnServiceDied) (void *userData)

Description

Handle of the callback processing function invoked when the device driver service terminates unexpectedly during asynchronous inference.

You need to rebuild the model if the callback is invoked.

You can use the userData parameter to specify the asynchronous inference to query. The value of userData is the same as that passed to OH_NNExecutor_RunAsync.

Since: 11

Parameters

| Name | Description |

|---|---|

| userData | Identifier of asynchronous inference. The value is the same as the userData parameter passed to OH_NNExecutor_RunAsync. |

NN_QuantParam

typedef struct NN_QuantParam NN_QuantParam

Description

Quantization parameter handle.

Since: 11

NN_Tensor

typedef struct NN_Tensor NN_Tensor

Description

Tensor handle.

Since: 11

NN_TensorDesc

typedef struct NN_TensorDesc NN_TensorDesc

Description

Tensor description handle.

Since: 11

OH_NN_Memory(deprecated)

typedef struct OH_NN_Memory OH_NN_Memory

Description

Used to define the memory structure.

Since: 9

Deprecated: This API is deprecated since API version 11.

Substitute: You are advised to use NN_Tensor.

OH_NN_QuantParam(deprecated)

typedef struct OH_NN_QuantParam OH_NN_QuantParam

Description

Used to define the quantization information.

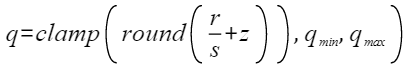

In quantization scenarios, the 32-bit floating-point data type is quantized into the fixed-point data type according to the following formula:

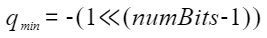

where s and z are quantization parameters, which are stored by scale and zeroPoint in OH_NN_QuantParam. r is a floating point number, q is the quantization result, q_min is the lower bound of the quantization result, and q_max is the upper bound of the quantization result. The calculation method is as follows:

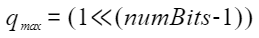

The clamp function is defined as follows:

Since: 9

Deprecated: This API is deprecated since API version 11.

Substitute: You are advised to use NN_QuantParam.
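The formulas in this section are provided as images, so the following is only a sketch of how the description could be read. It assumes the common affine form q = clamp(round(r / s + z), q_min, q_max) and a signed range of q_min = -(1 << (numBits - 1)) and q_max = (1 << (numBits - 1)) - 1. Both are assumptions, not taken from this page. The function names clamp_double and quantize are hypothetical.

```c
#include <assert.h>
#include <stdint.h>

/* clamp(x, lo, hi): limits x to the closed interval [lo, hi]. */
static double clamp_double(double x, double lo, double hi)
{
    if (x < lo) return lo;
    if (x > hi) return hi;
    return x;
}

/* Quantizes r with scale s and zero point z into a signed numBits-bit range.
 * The range bounds below are an assumption of this sketch. */
static int32_t quantize(float r, double scale, int32_t zeroPoint, uint32_t numBits)
{
    assert(scale > 0.0);
    assert(numBits >= 1 && numBits <= 16);
    assert(r == r); /* NaN input is not handled in this sketch */

    int32_t qMin = -(1 << (numBits - 1));
    int32_t qMax = (1 << (numBits - 1)) - 1;

    /* Clamp before rounding so the integer conversion cannot overflow.
     * Because qMin and qMax are integers, this gives the same result as
     * rounding first and clamping afterwards. */
    double x = clamp_double((double)r / scale + (double)zeroPoint,
                            (double)qMin, (double)qMax);

    /* Round half away from zero without depending on libm. */
    return (x >= 0.0) ? (int32_t)(x + 0.5) : (int32_t)(x - 0.5);
}
```

For example, with scale 0.5, zero point 0, and 8 bits, the value 1.0 maps to 2, and values far outside the representable range saturate at the assumed bounds.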

OH_NN_Tensor(deprecated)

typedef struct OH_NN_Tensor OH_NN_Tensor

Description

Used to define the tensor structure.

It is usually used to construct data nodes and operator parameters in a model graph. When constructing a tensor, you need to specify the data type, number of dimensions, dimension information, and quantization information.

Since: 9

Deprecated: This API is deprecated since API version 11.

Substitute: You are advised to use NN_TensorDesc.

OH_NN_UInt32Array

typedef struct OH_NN_UInt32Array OH_NN_UInt32Array

Description

Defines the structure for storing 32-bit unsigned integer arrays.

Since: 9

OH_NNCompilation

typedef struct OH_NNCompilation OH_NNCompilation

Description

Compiler handle.

Since: 9

OH_NNExecutor

typedef struct OH_NNExecutor OH_NNExecutor

Description

Executor handle.

Since: 9

OH_NNModel

typedef struct OH_NNModel OH_NNModel

Description

Model handle.

Since: 9

Enum Description

OH_NN_DataType

enum OH_NN_DataType

Description

Data types supported by NNRt.

Since: 9

| Value | Description |

|---|---|

| OH_NN_UNKNOWN | Unknown type. |

| OH_NN_BOOL | bool type. |

| OH_NN_INT8 | int8 type. |

| OH_NN_INT16 | int16 type. |

| OH_NN_INT32 | int32 type. |

| OH_NN_INT64 | int64 type. |

| OH_NN_UINT8 | uint8 type. |

| OH_NN_UINT16 | uint16 type. |

| OH_NN_UINT32 | uint32 type. |

| OH_NN_UINT64 | uint64 type. |

| OH_NN_FLOAT16 | float16 type. |

| OH_NN_FLOAT32 | float32 type. |

| OH_NN_FLOAT64 | float64 type. |
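As an illustration of how a data type and a shape determine the size of tensor data, the sketch below maps each value to an element width and multiplies it by the element count. The enum is a local stand-in with the values listed on this page; real code would include the NNRt headers. The one-byte width for OH_NN_BOOL and the helper names element_size and tensor_byte_size are assumptions, and this page does not specify how the runtime itself computes the size.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* Local stand-in with the values listed on this page. */
typedef enum {
    OH_NN_UNKNOWN = 0, OH_NN_BOOL = 1, OH_NN_INT8 = 2, OH_NN_INT16 = 3,
    OH_NN_INT32 = 4, OH_NN_INT64 = 5, OH_NN_UINT8 = 6, OH_NN_UINT16 = 7,
    OH_NN_UINT32 = 8, OH_NN_UINT64 = 9, OH_NN_FLOAT16 = 10,
    OH_NN_FLOAT32 = 11, OH_NN_FLOAT64 = 12
} OH_NN_DataType;

/* Hypothetical helper: bytes per element, or 0 for an unknown type.
 * The 1-byte width for OH_NN_BOOL is an assumption. */
static size_t element_size(OH_NN_DataType type)
{
    switch (type) {
        case OH_NN_BOOL: case OH_NN_INT8: case OH_NN_UINT8:
            return 1;
        case OH_NN_INT16: case OH_NN_UINT16: case OH_NN_FLOAT16:
            return 2;
        case OH_NN_INT32: case OH_NN_UINT32: case OH_NN_FLOAT32:
            return 4;
        case OH_NN_INT64: case OH_NN_UINT64: case OH_NN_FLOAT64:
            return 8;
        default:
            return 0;
    }
}

/* Hypothetical helper: element count times element width.
 * Returns 0 if any dimension is not positive (for example, a dynamic one). */
static size_t tensor_byte_size(OH_NN_DataType type, const int32_t *shape,
                               size_t shapeLength)
{
    assert(shape != NULL || shapeLength == 0);
    size_t count = 1;
    for (size_t i = 0; i < shapeLength; ++i) {
        if (shape[i] <= 0) {
            return 0;
        }
        count *= (size_t)shape[i];
    }
    return count * element_size(type);
}
```

Under these assumptions, a float32 tensor of shape [1, 3, 224, 224] occupies 602112 bytes.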

OH_NN_DeviceType

enum OH_NN_DeviceType

Description

Device types supported by NNRt.

Since: 9

| Value | Description |

|---|---|

| OH_NN_OTHERS | Devices other than CPUs, GPUs, and dedicated accelerators. |

| OH_NN_CPU | CPU. |

| OH_NN_GPU | GPU. |

| OH_NN_ACCELERATOR | Dedicated device accelerator. |

OH_NN_Format

enum OH_NN_Format

Description

Formats of tensor data.

Since: 9

| Value | Description |

|---|---|

| OH_NN_FORMAT_NONE | The tensor does not have a specific arrangement type (such as scalar or vector). |

| OH_NN_FORMAT_NCHW | The tensor arranges data in NCHW format. |

| OH_NN_FORMAT_NHWC | The tensor arranges data in NHWC format. |

| OH_NN_FORMAT_ND | The tensor arranges data in ND format. This value is supported since API version 11. |
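To make the difference between the NCHW and NHWC arrangements concrete, the sketch below computes the flat offset of element (n, c, h, w) in a contiguous buffer for each layout. It assumes densely packed data with no padding or strides. The helper names offset_nchw and offset_nhwc are hypothetical.

```c
#include <assert.h>
#include <stddef.h>

/* NCHW: the width index varies fastest, then height, then channel. */
static size_t offset_nchw(size_t n, size_t c, size_t h, size_t w,
                          size_t C, size_t H, size_t W)
{
    assert(c < C && h < H && w < W);
    return ((n * C + c) * H + h) * W + w;
}

/* NHWC: the channel index varies fastest, then width, then height. */
static size_t offset_nhwc(size_t n, size_t c, size_t h, size_t w,
                          size_t C, size_t H, size_t W)
{
    assert(c < C && h < H && w < W);
    return ((n * H + h) * W + w) * C + c;
}
```

For a tensor with C = 3, H = 2, and W = 2, element (n = 0, c = 1, h = 0, w = 1) sits at offset 5 in NCHW and at offset 4 in NHWC.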

OH_NN_FuseType

enum OH_NN_FuseType : int8_t

Description

Activation function types in the fusion operator for NNRt.

Since: 9

| Value | Description |

|---|---|

| OH_NN_FUSED_NONE | The fusion activation function is not specified. |

| OH_NN_FUSED_RELU | Fused ReLU activation function. |

| OH_NN_FUSED_RELU6 | Fused ReLU6 activation function. |
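As a sketch of what each fused activation computes on a single value, the snippet below uses the standard definitions ReLU(x) = max(0, x) and ReLU6(x) = min(max(0, x), 6). The enum is a local stand-in declared as a plain C enum with the values listed on this page; real code would include the NNRt headers. The function apply_fused_activation is hypothetical and is not part of the NNRt API.

```c
#include <assert.h>

/* Local stand-in with the values listed on this page. */
typedef enum {
    OH_NN_FUSED_NONE = 0,
    OH_NN_FUSED_RELU = 1,
    OH_NN_FUSED_RELU6 = 2
} OH_NN_FuseType;

/* Hypothetical helper: applies the selected activation to one value. */
static float apply_fused_activation(float x, OH_NN_FuseType type)
{
    switch (type) {
        case OH_NN_FUSED_NONE:
            return x;
        case OH_NN_FUSED_RELU:
            return (x > 0.0f) ? x : 0.0f;
        case OH_NN_FUSED_RELU6:
            if (x < 0.0f) return 0.0f;
            return (x > 6.0f) ? 6.0f : x;
        default:
            assert(0 && "unknown fuse type");
            return x;
    }
}
```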

OH_NN_OperationType

enum OH_NN_OperationType

Description

Operator types supported by NNRt.

Since: 9

| Value | Description |

|---|---|

| OH_NN_OPS_ADD | Returns the element-wise sum of two input tensors. Input: - input1: first input tensor, which is of the Boolean or number type. - input2: second input tensor, whose data type must be the same as that of the first tensor. Parameters: - activationType: integer constant contained in FuseType. The specified activation function is called before output. Output: output: sum of input1 and input2. The data shape is the same as that of the input after broadcasting, and the data type is the same as that of the input with a higher precision. |

| OH_NN_OPS_AVG_POOL | Applies 2D average pooling to the input tensor, which must be in the NHWC format. Int8 quantized input is supported. If the input contains the padMode parameter: Input: - input: a tensor. Parameters: - kernelSize: average kernel size. It is an int array whose length is 2. It is in the format of [kernel_height, kernel_width], where the first number indicates the kernel height, and the second number indicates the kernel width. - strides: kernel moving stride. It is an int array whose length is 2. It is in the format of [stride_height, stride_width], where the first number indicates the moving stride in height, and the second number indicates the moving stride in width. - padMode: padding mode, which is optional. It is an int value, which can be 0 (same) or 1 (valid). The nearest neighbor value is used for padding. 0 (same): The height and width of the output are the same as those of the input. The total padding quantity is calculated horizontally and vertically and evenly distributed to the top, bottom, left, and right if possible. Otherwise, the extra padding is added to the bottom and right. 1 (valid): The possible maximum height and width of the output will be returned in case of no padding. Excess pixels are discarded. - activationType: integer constant contained in FuseType. The specified activation function is called before output. If the input contains the padList parameter: Input: - input: a tensor. Parameters: - kernelSize: average kernel size. It is an int array whose length is 2. It is in the format of [kernel_height, kernel_width], where the first number indicates the kernel height, and the second number indicates the kernel width. - strides: kernel moving stride. It is an int array whose length is 2. It is in the format of [stride_height, stride_width], where the first number indicates the moving stride in height, and the second number indicates the moving stride in width. - padList: padding around input. It is an int array in the format of [top, bottom, left, right], and the nearest neighbor values are used for padding. - activationType: integer constant contained in FuseType. The specified activation function is called before output. Output: output: average pooling result of the input. |

| OH_NN_OPS_BATCH_NORM | Performs batch normalization on a tensor to scale and shift tensor elements, relieving potential covariate shift in a batch of data. Input: - input: n-dimensional tensor in the shape of [N, ..., C]. The nth dimension is the number of channels. - scale: 1D tensor of the scaling factor used to scale the first normalized tensor. - offset: 1D tensor of the offset used to shift the first normalized tensor. - mean: 1D tensor of the overall mean value. It is used only for inference. In case of training, this parameter must be left empty. - variance: 1D tensor of the overall variance. It is used only for inference. In case of training, this parameter must be left empty. Parameters: - epsilon: small constant added to the variance to avoid division by zero. Output: output: n-dimensional output tensor whose shape and data type are the same as those of the input. |

| OH_NN_OPS_BATCH_TO_SPACE_ND | Divides the batch dimension of a 4D tensor into small blocks by block_shape and interleaves them into the spatial dimensions. Input: - input: input tensor. The batch dimension will be divided into small blocks, and these blocks will be interleaved into the spatial dimensions. Parameters: - blockSize: size of blocks to be interleaved into the spatial dimensions. The value is an array in the format of [height_block, width_block]. - crops: elements truncated from the spatial dimensions of the output. The value is a 2D array in the format of [[crop0_start, crop0_end], [crop1_start, crop1_end]] with the shape of (2, 2). Output: - output. If the shape of input is (n,h,w,c), the shape of output is (n',h',w',c'), where n' = n / (block_shape[0] * block_shape[1]), h' = h * block_shape[0] - crops[0][0] - crops[0][1], w' = w * block_shape[1] - crops[1][0] - crops[1][1], and c'= c. |

| OH_NN_OPS_BIAS_ADD | Offsets the data in each dimension of the input tensor. Input: - input: input tensor, which can have two to five dimensions. - bias: offset of the number of input dimensions. Output: output: sum of the input tensor and the bias in each dimension. |

| OH_NN_OPS_CAST | Converts the data type in the input tensor. Input: - input: input tensor. - type: converted data type. Output: output: converted tensor. |

| OH_NN_OPS_CONCAT | Connects tensors in a specified dimension. Input: - input: n input tensors. Parameters: - axis: dimension for connecting tensors. Output: output: result of connecting n tensors along the axis. |

| OH_NN_OPS_CONV2D | Sets a 2D convolutional layer. If the input contains the padMode parameter: Input: - input: input tensor. - weight: convolution weight in the format of [outChannel, kernelHeight, kernelWidth, inChannel/group]. The value of inChannel must be exactly divisible by the value of group. - bias: bias of the convolution. It is an array with a length of [outChannel]. In quantization scenarios, quantization parameters are not required for bias. You only need to input data of the OH_NN_INT32 type. The actual quantization parameters are determined by input and weight. Parameters: - stride: moving stride of the convolution kernel in height and width. It is an int array in the format of [strideHeight, strideWidth]. - dilation: dilation size of the convolution kernel in height and width. It is an int array in the format of [dilationHeight, dilationWidth]. The value must be greater than or equal to 1 and cannot exceed the height and width of input. - padMode: padding mode of input. The value is of the int type and can be 0 (same) or 1 (valid). 0 (same): The height and width of the output are the same as those of the input. The total padding quantity is calculated horizontally and vertically and evenly distributed to the top, bottom, left, and right if possible. Otherwise, the extra padding is added to the bottom and right. 1 (valid): The possible maximum height and width of the output will be returned in case of no padding. Excess pixels are discarded. - group: number of groups into which the input is divided along in_channel. The value is of the int type. If group is 1, it is a conventional convolution. If group is greater than 1 and less than or equal to in_channel, it is a group convolution. - activationType: integer constant contained in FuseType. The specified activation function is called before output. If the input contains the padList parameter: Input: - input: input tensor. - weight: convolution weight in the format of [outChannel, kernelHeight, kernelWidth, inChannel/group]. The value of inChannel must be exactly divisible by the value of group. - bias: bias of the convolution. It is an array with a length of [outChannel]. In quantization scenarios, quantization parameters are not required for bias. You only need to input data of the OH_NN_INT32 type. The actual quantization parameters are determined by input and weight. Parameters: - stride: moving stride of the convolution kernel in height and width. It is an int array in the format of [strideHeight, strideWidth]. - dilation: dilation size of the convolution kernel in height and width. It is an int array in the format of [dilationHeight, dilationWidth]. The value must be greater than or equal to 1 and cannot exceed the height and width of input. - padList: padding around input. It is an int array in the format of [top, bottom, left, right]. - group: number of groups into which the input is divided along in_channel. The value is of the int type. If group is 1, it is a conventional convolution. If group is in_channel, it is depthwiseConv2d. In this case, group==in_channel==out_channel. If group is greater than 1 and less than in_channel, it is a group convolution. In this case, out_channel==group. - activationType: integer constant contained in FuseType. The specified activation function is called before output. Output: - output: convolution computing result. |

| OH_NN_OPS_CONV2D_TRANSPOSE | Sets 2D convolution transposition. If the input contains the padMode parameter: Input: - input: input tensor. - weight: convolution weight in the format of [outChannel, kernelHeight, kernelWidth, inChannel/group]. The value of inChannel must be exactly divisible by the value of group. - bias: bias of the convolution. It is an array with a length of [outChannel]. In quantization scenarios, quantization parameters are not required for bias. You only need to input data of the OH_NN_INT32 type. The actual quantization parameters are determined by input and weight. Parameters: - stride: moving stride of the convolution kernel in height and width. It is an int array in the format of [strideHeight, strideWidth]. - dilation: dilation size of the convolution kernel in height and width. It is an int array in the format of [dilationHeight, dilationWidth]. The value must be greater than or equal to 1 and cannot exceed the height and width of input. - padMode: padding mode of input. The value is of the int type and can be 0 (same) or 1 (valid). 0 (same): The height and width of the output are the same as those of the input. The total padding quantity is calculated horizontally and vertically and evenly distributed to the top, bottom, left, and right if possible. Otherwise, the last additional padding will be completed from the bottom and right. 1 (valid): The possible maximum height and width of the output will be returned in case of no padding. The excessive pixels will be discarded. - group: number of groups into which the input is divided along in_channel. The value is of the int type. If group is 1, it is a conventional convolution. If group is greater than 1 and less than or equal to in_channel, it is a group convolution. - outputPads: padding along the height and width of the output tensor. The value is an int number, a tuple, or a list of two integers. It can be a single integer to specify the same value for all spatial dimensions. The amount of output padding along a dimension must be less than the stride along this dimension. - activationType: integer constant contained in FuseType. The specified activation function is called before output. If the input contains the padList parameter: Input: - input: input tensor. - weight: convolution weight in the format of [outChannel, kernelHeight, kernelWidth, inChannel/group]. The value of inChannel must be exactly divisible by the value of group. - bias: bias of the convolution. It is an array with a length of [outChannel]. In quantization scenarios, quantization parameters are not required for bias. You only need to input data of the OH_NN_INT32 type. The actual quantization parameters are determined by input and weight. Parameters: - stride: moving stride of the convolution kernel in height and width. It is an int array in the format of [strideHeight, strideWidth]. - dilation: dilation size of the convolution kernel in height and width. It is an int array in the format of [dilationHeight, dilationWidth]. The value must be greater than or equal to 1 and cannot exceed the height and width of input. - padList: padding around input. It is an int array in the format of [top, bottom, left, right]. - group: number of groups into which the input is divided along in_channel. The value is of the int type. If group is 1, it is a conventional convolution. If group is greater than 1 and less than or equal to in_channel, it is a group convolution. - outputPads: padding along the height and width of the output tensor. The value is an int number, a tuple, or a list of two integers. It can be a single integer to specify the same value for all spatial dimensions. The amount of output padding along a dimension must be less than the stride along this dimension. - activationType: integer constant contained in FuseType. The specified activation function is called before output. Output: - output: computing result after convolution and transposition. |

| OH_NN_OPS_DEPTHWISE_CONV2D_NATIVE | Sets 2D depthwise separable convolution. If the input contains the padMode parameter: Input: - input: input tensor. - weight: convolution weight in the format of [outChannel, kernelHeight, kernelWidth, 1]. outChannel is equal to channelMultiplier multiplied by inChannel. - bias: bias of the convolution. It is an array with a length of [outChannel]. In quantization scenarios, quantization parameters are not required for bias. You only need to input data of the OH_NN_INT32 type. The actual quantization parameters are determined by input and weight. Parameters: - stride: moving stride of the convolution kernel in height and width. It is an int array in the format of [strideHeight, strideWidth]. - dilation: dilation size of the convolution kernel in height and width. It is an int array in the format of [dilationHeight, dilationWidth]. The value must be greater than or equal to 1 and cannot exceed the height and width of input. - padMode: padding mode of input. The value is of the int type and can be 0 (same) or 1 (valid). 0 (same): The height and width of the output are the same as those of the input. The total padding quantity is calculated horizontally and vertically and evenly distributed to the top, bottom, left, and right if possible. 1 (valid): The possible maximum height and width of the output will be returned in case of no padding. The excessive pixels will be discarded. - activationType: integer constant contained in FuseType. The specified activation function is called before output. If the input contains the padList parameter: Input: - input: input tensor. - weight: convolution weight in the format of [outChannel, kernelHeight, kernelWidth, 1]. outChannel is equal to channelMultiplier multiplied by inChannel. - bias: bias of the convolution. It is an array with a length of [outChannel]. In quantization scenarios, quantization parameters are not required for bias. You only need to input data of the OH_NN_INT32 type. The actual quantization parameters are determined by input and weight. Parameters: - stride: moving stride of the convolution kernel in height and width. It is an int array in the format of [strideHeight, strideWidth]. - dilation: dilation size of the convolution kernel in height and width. It is an int array in the format of [dilationHeight, dilationWidth]. The value must be greater than or equal to 1 and cannot exceed the height and width of input. - padList: padding around input. It is an int array in the format of [top, bottom, left, right]. - activationType: integer constant contained in FuseType. The specified activation function is called before output. Output: - output: convolution computing result. |

| OH_NN_OPS_DIV | Divides two input scalars or tensors. Input: - input1: first input, which is a number, a bool, or a tensor whose data type is number or Boolean. - input2: second input, which must meet the following requirements: If the first input is a real number or Boolean value, the second input must be a tensor whose data type is real number or Boolean value. Parameters: - activationType: integer constant contained in FuseType. The specified activation function is called before output. Output: - output: result of dividing input1 by input2. |

| OH_NN_OPS_ELTWISE | Sets parameters to perform product (dot product), sum (addition and subtraction), or max (larger value) on the input. Input: - input1: first input tensor. - input2: second input tensor. Parameters: - mode: operation mode. The value is an enumerated value. Output: - output: computing result, which has the same data type and shape as input1. |

| OH_NN_OPS_EXPAND_DIMS | Adds an additional dimension to a tensor in the given dimension. Input: - input: input tensor. - axis: index of the dimension to be added. The value is of the int32_t type and must be a constant in the range [-dim-1, dim]. Output: - output: tensor after dimension expansion. |

| OH_NN_OPS_FILL | Creates a tensor of the specified dimensions and fills it with a scalar. Input: - value: scalar used to fill the tensor. - shape: dimensions of the tensor to be created. Output: - output: generated tensor, which has the same data type as value. The tensor shape is specified by the shape parameter. |

| OH_NN_OPS_FULL_CONNECTION | Sets a full connection. The entire input is used as the feature map for feature extraction. Input: - input: full-connection input tensor. - weight: weight tensor for a full connection. - bias: full-connection bias. In quantization scenarios, quantization parameters are not required for bias. You only need to input data of the OH_NN_INT32 type. The actual quantization parameters are determined by input and weight. Parameters: - activationType: integer constant contained in FuseType. The specified activation function is called before output. Output: - output: computed tensor. If the input contains the axis parameter: Input: - input: full-connection input tensor. - weight: weight tensor for a full connection. - bias: full-connection bias. In quantization scenarios, quantization parameters are not required for bias. You only need to input data of the OH_NN_INT32 type. The actual quantization parameters are determined by input and weight. Parameters: - axis: axis in which the full connection is applied. The specified axis and its following axes are converted into a 1D tensor for applying the full connection. - activationType: integer constant contained in FuseType. The specified activation function is called before output. Output: - output: computed tensor. |

| OH_NN_OPS_GATHER | Returns the slice of the input tensor based on the specified index and axis. Input: - input: tensor to be sliced. - inputIndices: indices of the specified input on the axis. The value is an array of the int type and must be in the range [0,input.shape[axis]). - axis: axis on which input is sliced. The value is an array with one element of the int32_t type. Output: - output: sliced tensor. |

| OH_NN_OPS_HSWISH | Calculates the Hswish activation value of the input. Input: - input: n-dimensional input tensor. Output: - output: n-dimensional Hswish activation value, with the same data type and shape as input. |

| OH_NN_OPS_LESS_EQUAL | Calculates the result of input1[i]<=input2[i] for each pair of elements in input1 and input2, where i is the index of each element in the input tensor. Input: - input1: real number, Boolean value, or tensor whose data type is real number or NN_BOOL. - input2: real number or Boolean value if input1 is a tensor; tensor whose data type is real number or NN_BOOL if input1 is not a tensor. Output: - output: tensor of the NN_BOOL type. When a quantization model is used, the quantization parameters of the output cannot be omitted. However, values of the quantization parameters do not affect the result. |

| OH_NN_OPS_MATMUL | Calculates the inner product of input1 and input2. Input: - input1: n-dimensional input tensor. - input2: n-dimensional input tensor. Parameters: - TransposeX: Boolean value indicating whether to transpose input1. - TransposeY: Boolean value indicating whether to transpose input2. Output: - output: inner product obtained after calculation. In case of type!=NN_UNKNOWN, the output data type is determined by type. In case of type==NN_UNKNOWN, the output data type depends on the data type converted during computing of input1 and input2. |

| OH_NN_OPS_MAXIMUM | Calculates the maximum of input1 and input2 element-wise. The inputs of input1 and input2 comply with the implicit type conversion rules to make the data types consistent. The input must be two tensors or one tensor and one scalar. If the input contains two tensors, their data types cannot be both NN_BOOL. Their shapes can be broadcast to the same size. If the input contains one tensor and one scalar, the scalar must be a constant. Input: - input1: n-dimensional input tensor of the real number or NN_BOOL type. - input2: n-dimensional input tensor of the real number or NN_BOOL type. Output: - output: n-dimensional output tensor. The shape and data type of output are the same as those of the two inputs with higher precision or bits. |

| OH_NN_OPS_MAX_POOL | Applies 2D maximum pooling to the input tensor. If the input contains the padMode parameter: Input: - input: a tensor. Parameters: - kernelSize: size of the pooling kernel. It is an int array whose length is 2. It is in the format of [kernel_height, kernel_width], where the first number indicates the kernel height, and the second number indicates the kernel width. - strides: kernel moving stride. It is an int array whose length is 2. It is in the format of [stride_height, stride_width], where the first number indicates the moving stride in height, and the second number indicates the moving stride in width. - padMode: padding mode, which is optional. It is an int value, which can be 0 (same) or 1 (valid). The nearest neighbor value is used for padding. 0 (same): The height and width of the output are the same as those of the input. The total padding quantity is calculated horizontally and vertically and evenly distributed to the top, bottom, left, and right if possible. Otherwise, the last additional padding will be completed from the bottom and right. 1 (valid): The possible maximum height and width of the output will be returned in case of no padding. The excessive pixels will be discarded. - activationType: integer constant contained in FuseType. The specified activation function is called before output. If the input contains the padList parameter: Input: - input: a tensor. Parameters: - kernelSize: size of the pooling kernel. It is an int array whose length is 2. It is in the format of [kernel_height, kernel_width], where the first number indicates the kernel height, and the second number indicates the kernel width. - strides: kernel moving stride. It is an int array whose length is 2. It is in the format of [stride_height, stride_width], where the first number indicates the moving stride in height, and the second number indicates the moving stride in width. - padList: padding around input. It is an int array in the format of [top, bottom, left, right], and the nearest neighbor values are used for padding. - activationType: integer constant contained in FuseType. The specified activation function is called before output. Output: - output: tensor obtained after maximum pooling is applied to the input. |

| OH_NN_OPS_MUL | Multiplies elements in the same positions of input1 and input2 to obtain the output. If input1 and input2 have different shapes, they are expanded to the same shape through broadcast before multiplication. Input: - input1: n-dimensional tensor. - input2: n-dimensional tensor. Parameters: - activationType: integer constant contained in FuseType. The specified activation function is called before output. Output: - output: product of each element of input1 and input2. |

| OH_NN_OPS_ONE_HOT | Generates a one-hot tensor based on the positions specified by indices. The positions specified by indices are determined by on_value, and other positions are determined by off_value. Input: - indices: n-dimensional tensor. Each element in indices determines the position of on_value in each one-hot vector. - depth: integer scalar that determines the depth of the one-hot vector. The value of depth must be greater than 0. - on_value: scalar that specifies a valid value in the one-hot vector. - off_value: scalar that specifies the values of other locations in the one-hot vector except the valid value. Parameters: - axis: integer scalar that specifies the dimension for inserting the one-hot. Assume that the shape of indices is [N, C], and the value of depth is D. When axis is 0, the shape of the output is [D, N, C]. When axis is -1, the shape of the output is [N, C, D]. When axis is 1, the shape of the output is [N, D, C]. Output: - output: (n+1)-dimensional tensor if indices is an n-dimensional tensor. The output shape is determined by indices and axis. |

| OH_NN_OPS_PAD | Pads inputX in the specified dimensions. Input: - inputX: n-dimensional tensor in [BatchSize, ...] format. - paddings: 2D tensor that specifies the length to pad in each dimension. The shape is [n, 2]. For example, paddings[i][0] indicates the number of paddings to be added preceding inputX in the ith dimension, and paddings[i][1] indicates the number of paddings to be added following inputX in the ith dimension. Parameters: - padValues: value to be added to the pad operation. The value is a constant with the same data type as inputX. Output: - output: n-dimensional tensor after padding, with the same number of dimensions and data type as inputX. The shape is determined by inputX and paddings; that is, output.shape[i] = inputX.shape[i] + paddings[i][0] + paddings[i][1]. |

| OH_NN_OPS_POW | Calculates the y power of each element in input. The input must contain two tensors or one tensor and one scalar. If the input contains two tensors, their data types cannot be both NN_BOOL, and their shapes must be the same. If the input contains one tensor and one scalar, the scalar must be a constant. Input: - input: real number, Boolean value, or tensor whose data type is real number or NN_BOOL. - y: real number, Boolean value, or tensor whose data type is real number or NN_BOOL. Output: - output: tensor, whose shape is determined by the shape of input and y after broadcasting. |

| OH_NN_OPS_SCALE | Scales a tensor. Input: - input: n-dimensional tensor. - scale: scaling tensor. - bias: bias tensor. Parameters: - axis: dimensions to be scaled. - activationType: integer constant contained in FuseType. The specified activation function is called before output. Output: - output: scaled n-dimensional tensor, whose data type is the same as that of input and shape is determined by axis. |

| OH_NN_OPS_SHAPE | Calculates the shape of the input tensor. Input: - input: n-dimensional tensor. Output: - output: integer array representing the dimensions of the input tensor. |

| OH_NN_OPS_SIGMOID | Applies the sigmoid operation to the input tensor. Input: - input: n-dimensional tensor. Output: - output: result of the sigmoid operation. It is an n-dimensional tensor with the same data type and shape as input. |

| OH_NN_OPS_SLICE | Slices a tensor of the specified size from the input tensor in each dimension. Input: - input: n-dimensional input tensor. - begin: start of the slice, which is an array of integers greater than or equal to 0. - size: slice length, which is an array of integers greater than or equal to 1. For each dimension i, 1<=size[i]<=input.shape[i]-begin[i]. Output: - output: n-dimensional tensor obtained by slicing. The TensorType of the output is the same as that of the input, and the output shape is given by size. |

| OH_NN_OPS_SOFTMAX | Applies the softmax operation to the input tensor. Input: - input: n-dimensional input tensor. Parameters: - axis: dimension in which the softmax operation is performed. The value is of the int64 type. It is an integer in the range [-n, n). Output: - output: result of the softmax operation. It is an n-dimensional tensor with the same data type and shape as input. |

| OH_NN_OPS_SPACE_TO_BATCH_ND | Divides a 4D tensor into small blocks and combines these blocks in the original batch. The number of blocks is blockShape[0] multiplied by blockShape[1]. Input: - input: 4D tensor. Parameters: - blockShape: a pair of integers. Each of them is greater than or equal to 1. - paddings: a pair of arrays. Each of them consists of two integers. The four integers that form paddings must be greater than or equal to 0. paddings[0][0] and paddings[0][1] specify the number of paddings in the third dimension, and paddings[1][0] and paddings[1][1] specify the number of paddings in the fourth dimension. Output: - output: 4D tensor with the same data type as input. The shape is determined by input, blockShape, and paddings. Assume that the input shape is [n,c,h,w]. Then: output.shape[0] = n * blockShape[0] * blockShape[1]; output.shape[1] = c; output.shape[2] = (h + paddings[0][0] + paddings[0][1]) / blockShape[0]; output.shape[3] = (w + paddings[1][0] + paddings[1][1]) / blockShape[1]. Note that (h + paddings[0][0] + paddings[0][1]) and (w + paddings[1][0] + paddings[1][1]) must be exactly divisible by blockShape[0] and blockShape[1], respectively. |

| OH_NN_OPS_SPLIT | Splits the input into multiple tensors along the axis dimension. The number of tensors is specified by outputNum. Input: - input: n-dimensional tensor. Parameters: - outputNum: number of output tensors. The data type is long int. - size_splits: size of each tensor split from the input. The value is a 1D tensor of the int type. If size_splits is empty, the input will be evenly split into tensors of the same size. In this case, input.shape[axis] must be exactly divisible by outputNum. If size_splits is not empty, the sum of all its elements must be equal to input.shape[axis]. - axis: splitting dimension of the int type. Output: - outputs: array of n-dimensional tensors, with the same data type and number of dimensions. The data type of each tensor is the same as that of input. |

| OH_NN_OPS_SQRT | Calculates the square root of a tensor. Input: - input: n-dimensional tensor. Output: - output: square root of the input. It is an n-dimensional tensor with the same data type and shape as input. |

| OH_NN_OPS_SQUARED_DIFFERENCE | Calculates the square of the difference between two tensors. The SquaredDifference operator supports tensor and tensor subtraction. If two tensors have different TensorTypes, the Sub operator converts the low-precision tensor to a high-precision one. If two tensors have different shapes, the two tensors can be extended to tensors with the same shape through broadcast. Input: - input1: minuend, which is a tensor of the NN_FLOAT16, NN_FLOAT32, NN_INT32, or NN_BOOL type. - input2: subtrahend, which is a tensor of the NN_FLOAT16, NN_FLOAT32, NN_INT32, or NN_BOOL type. Output: - output: square of the difference between two inputs. The output shape is determined by input1 and input2. If they have the same shape, the output tensor has the same shape as them. If they have different shapes, perform the broadcast operation on input1 and input2 and perform subtraction. TensorType of the output is the same as that of the input tensor with higher precision. |

| OH_NN_OPS_SQUEEZE | Removes the dimension with a length of 1 from the specified axis. The int8 quantization input is supported. Assume that the input shape is [2, 1, 1, 2, 2] and axis is [0,1]. The output shape is then [2, 1, 2, 2], which means the dimension whose length is 1 between dimension 0 and dimension 1 is removed. Input: - input: n-dimensional tensor. Parameters: - axis: dimension to be removed. The value is of int64_t type and can be an integer in the range [-n, n) or an array. Output: - output: output tensor. |

| OH_NN_OPS_STACK | Stacks multiple tensors along the specified axis. If each tensor has n dimensions before stacking, the output tensor will have n+1 dimensions. Input: - input: input for stacking, which can contain multiple n-dimensional tensors. Each of them must have the same shape and type. Parameters: - axis: dimension for tensor stacking, which is an integer. The value range is [-(n+1),(n+1)), which means a negative number is allowed. Output: - output: stacking result of the input along the axis dimension. The value is an n+1-dimensional tensor and has the same TensorType as the input. |

| OH_NN_OPS_STRIDED_SLICE | Slices a tensor with the specified stride. Input: - input: n-dimensional input tensor. - begin: start of slicing, which is a 1D tensor. The length of begin is n. begin[i] specifies the start of slicing in the ith dimension. - end: end of slicing, which is a 1D tensor. The length of end is n. end[i] specifies the end of slicing in the ith dimension. - strides: slicing stride, which is a 1D tensor. The length of strides is n. strides[i] specifies the stride at which the tensor is sliced in the ith dimension. Parameters: - beginMask: an integer used to mask begin. beginMask is represented in binary code. In case of binary(beginMask)[i]==1, for the ith dimension, elements are sliced from the first element at strides[i] until the end[i]-1 element. - endMask: an integer used to mask end. endMask is represented in binary code. In case of binary(endMask)[i]==1, elements are sliced from the element at the begin[i] position in the ith dimension until the tensor boundary at strides[i]. - ellipsisMask: integer used to mask begin and end. ellipsisMask is represented in binary code. In case of binary(ellipsisMask)[i]==1, elements are sliced from the first element at strides[i] in the ith dimension until the tensor boundary. Only one bit of binary(ellipsisMask) can be a non-zero value. - newAxisMask: new dimension, which is an integer. newAxisMask is represented in binary code. In case of binary(newAxisMask)[i]==1, a new dimension whose length is 1 is inserted into the ith dimension. - shrinkAxisMask: shrinking dimension, which is an integer. shrinkAxisMask is represented in binary code. In the case of binary(shrinkAxisMask)[i]==1, all elements in the ith dimension will be discarded, and the length of the ith dimension is shrunk to 1. Output: - A tensor, with the same data type as input. The number of dimensions of the output tensor is rank(input[0])+1. |

| OH_NN_OPS_SUB | Calculates the difference between two tensors. Input: - input1: minuend, which is a tensor. - input2: subtrahend, which is a tensor. Parameters: - activationType: integer constant contained in FuseType. The specified activation function is called before output. Output: - output: difference between the two tensors. The shape of the output is determined by input1 and input2. When the shapes of input1 and input2 are the same, the shape of the output is the same as that of input1 and input2. If the shapes of input1 and input2 are different, the output is obtained after the broadcast operation is performed on input1 or input2. TensorType of the output is the same as that of the input tensor with higher precision. |

| OH_NN_OPS_TANH | Computes hyperbolic tangent of the input tensor. Input: - input: n-dimensional tensor. Output: - output: hyperbolic tangent of the input. The TensorType and tensor shape are the same as those of the input. |

| OH_NN_OPS_TILE | Copies a tensor for the specified number of times. Input: - input: n-dimensional tensor. - multiples: number of times that the input tensor is copied in each dimension. The value is a 1D tensor. The length m is not less than the number of dimensions, that is, n. Output: - An m-dimensional tensor whose TensorType is the same as that of the input. If input and multiples have the same length, input and output have the same number of dimensions. If the length of multiples is greater than n, 1 is used to fill the input dimension, and then the input is copied in each dimension the specified times to obtain the m-dimensional tensor. |

| OH_NN_OPS_TRANSPOSE | Transposes the data of input based on permutation. Input: - input: n-dimensional tensor to be transposed. - permutation: 1D tensor whose length is the same as the number of dimensions of input. Output: - output: n-dimensional tensor. TensorType of output is the same as that of input, and the output shape is determined by the shape of input and by permutation. |

| OH_NN_OPS_REDUCE_MEAN | Calculates the average value in the specified dimension. If keepDims is set to false, the number of dimensions is reduced for the input; if keepDims is set to true, the number of dimensions is retained. Input: - input: n-dimensional input tensor, where n is less than 8. - axis: dimension used to calculate the average value. The value is a 1D tensor. The value range of each element in axis is [-n, n). Parameters: - keepDims: whether to retain the dimension. The value is a Boolean value. Output: - output: m-dimensional output tensor whose data type is the same as that of the input. If keepDims is false, m<n. If keepDims is true, m==n. |

| OH_NN_OPS_RESIZE_BILINEAR | Resizes the input using the bilinear method based on the given parameters. Input: - input: 4D input tensor. Each element in the input cannot be less than 0. The input layout must be [batchSize, height, width, channels]. Parameters: - newHeight: resized height of the 4D tensor. - newWidth: resized width of the 4D tensor. - preserveAspectRatio: whether to maintain the height/width ratio of input after resizing. - coordinateTransformMode: coordinate transformation method used by the resize operation. The value is an int32 integer. Currently, the following APIs are supported: - excludeOutside: an int64 number. When its value is 1, the sampling weight of the part that exceeds the boundary of input is set to 0, and other weights are normalized. Output: - output: n-dimensional tensor, with the same shape and data type as input. |

| OH_NN_OPS_RSQRT | Calculates the reciprocal of the square root of a tensor. Input: - input: n-dimensional tensor, where n is less than 8. Each element of the tensor cannot be less than 0. Output: - output: n-dimensional tensor, with the same shape and data type as input. |

| OH_NN_OPS_RESHAPE | Reshapes a tensor. Input: - input: n-dimensional input tensor. - InputShape: shape of the output tensor. The value is a 1D constant tensor. Output: - output: tensor whose data type is the same as that of input and shape is determined by InputShape. |

| OH_NN_OPS_PRELU | Calculates the PReLU activation value of input and weight. Input: - input: n-dimensional tensor. If n is greater than or equal to 2, input must be [BatchSize, ..., Channels]. The second dimension is the number of channels. - weight: 1D tensor. The length of weight must be 1 or equal to the number of channels. If the length of weight is 1, all channels share the same weight. If the length of weight is equal to the number of channels, each channel exclusively has a weight. If n is less than 2 for input, the weight length must be 1. Output: - output: PReLU activation value of input, with the same shape and data type as input. |

| OH_NN_OPS_RELU | Calculates the Relu activation value of input. Input: - input: n-dimensional input tensor. Output: - output: n-dimensional tensor, with the same data type and shape as the input tensor. |

| OH_NN_OPS_RELU6 | Calculates the Relu6 activation value of the input, that is, calculate min(max(x, 0), 6) for each element x in the input. Input: - input: n-dimensional input tensor. Output: - output: n-dimensional Relu6 tensor, with the same data type and shape as the input tensor. |

| OH_NN_OPS_LAYER_NORM | Applies layer normalization for a tensor from the specified axis. Input: - input: n-dimensional input tensor. - gamma: m-dimensional tensor. The dimensions of gamma must be the same as the shape of the part of the input tensor to normalize. - beta: m-dimensional tensor with the same shape as gamma. Parameters: - beginAxis: NN_INT32 scalar that specifies the axis from which normalization starts. The value range is [1, rank(input)). - epsilon: NN_FLOAT32 scalar. It is a tiny amount in the normalization formula. The common value is 1e-7. Output: - output: n-dimensional tensor, with the same data type and shape as the input tensor. |

| OH_NN_OPS_REDUCE_PROD | Calculates the product of the elements of a tensor along the specified dimension. Input: - input: n-dimensional input tensor, where n is less than 8. - axis: dimension used to calculate the product. The value is a 1D tensor. The value range of each element in axis is [-n, n). Parameters: - keepDims: whether to retain the dimension. The value is a Boolean value. When its value is true, the number of output dimensions is the same as that of the input. When its value is false, the number of output dimensions is reduced. Output: - output: m-dimensional output tensor whose data type is the same as that of the input. If keepDims is false, m<n. If keepDims is true, m==n. |

| OH_NN_OPS_REDUCE_ALL | Performs the logical AND operation along the specified dimension. If keepDims is set to false, the number of dimensions is reduced for the input; if keepDims is set to true, the number of dimensions is retained. Input: - input: n-dimensional input tensor, where n is less than 8. - axis: 1D tensor specifying the dimension along which the logical AND is performed. The value range of each element in axis is [-n, n). Parameters: - keepDims: whether to retain the dimension. The value is a Boolean value. Output: - output: m-dimensional output tensor whose data type is the same as that of the input. If keepDims is true, m==n. If keepDims is false, m<n. |

| OH_NN_OPS_QUANT_DTYPE_CAST | Converts the data type. Input: - input: n-dimensional tensor. Parameters: - src_t: data type of the input. - dst_t: data type of the output. Output: - output: n-dimensional tensor. The data type is determined by dst_t. The output shape is the same as the input shape. |

| OH_NN_OPS_TOP_K | Obtains the values and indices of the largest k entries in the last dimension. Input: - input: n-dimensional tensor. - k: number of largest entries to obtain, together with their indices. Parameters: - sorted: order of sorting. The value true means descending and false means ascending. Output: - output0: largest k elements in each slice of the last dimension. - output1: indices of those elements in the last dimension of the input. |

| OH_NN_OPS_ARG_MAX | Returns the index of the maximum tensor value across axes. Input: - input: n-dimensional tensor (N, *), where * means any number of additional dimensions. Parameters: - axis: dimension for calculating the index of the maximum. - keep_dims: whether to maintain the input tensor dimension. The value is a Boolean value. Output: - output: index of the maximum input tensor on the axis. The value is a tensor. |

| OH_NN_OPS_UNSQUEEZE | Adds a dimension based on the value of axis. Input: - input: n-dimensional tensor. Parameters: - axis: dimension to add. The value of axis can be an integer or an array of integers. The value range of the integer is [-n, n). Output: - output: output tensor. |

| OH_NN_OPS_GELU | Applies the Gaussian error linear unit (GELU) activation. Integer-quantized input is not supported. output = 0.5 * input * (1 + tanh(input / 2)) Input: - input: n-dimensional input tensor. Output: - output: n-dimensional tensor, with the same data type and shape as the input tensor. |
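
The element-wise and reduction semantics described in the operator table can be checked against a small reference implementation. The following is a minimal sketch in plain C, not NNRt code: `Relu6`, `NormalizeAxis`, and `ReduceProd2D` are hypothetical helper names, and the reduction is restricted to a row-major 2D matrix for brevity. It assumes that a negative axis in [-n, n) counts from the last dimension.

```c
#include <assert.h>
#include <stddef.h>

/* ReLU6 for one element: min(max(x, 0), 6), as described for OH_NN_OPS_RELU6. */
static float Relu6(float x)
{
    if (x < 0.0f) {
        return 0.0f;
    }
    return (x > 6.0f) ? 6.0f : x;
}

/* Maps an axis in [-n, n) onto [0, n). Returns -1 when the axis is out of range. */
static int NormalizeAxis(int axis, int n)
{
    if (axis < -n || axis >= n) {
        return -1;
    }
    return (axis < 0) ? axis + n : axis;
}

/*
 * Product reduction of a row-major rows x cols matrix along one axis
 * (hypothetical helper mirroring the OH_NN_OPS_REDUCE_PROD description).
 * Axis 0 yields cols products, axis 1 yields rows products.
 * Returns the number of values written to out, or 0 for an invalid axis.
 */
static size_t ReduceProd2D(const float *in, size_t rows, size_t cols, int axis, float *out)
{
    assert(in != NULL && out != NULL);
    int a = NormalizeAxis(axis, 2);
    if (a < 0) {
        return 0;
    }
    size_t outCount = (a == 0) ? cols : rows;
    size_t reduceLen = (a == 0) ? rows : cols;
    for (size_t i = 0; i < outCount; ++i) {
        float product = 1.0f;
        for (size_t j = 0; j < reduceLen; ++j) {
            size_t r = (a == 0) ? j : i;
            size_t c = (a == 0) ? i : j;
            product *= in[r * cols + c];
        }
        out[i] = product;
    }
    return outCount;
}
```

For a 2 x 3 input, reducing along axis -1 produces two products. With keepDims set to true the result keeps two dimensions (shape [2, 1]); with keepDims set to false it has one dimension (shape [2]).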

OH_NN_PerformanceMode

enum OH_NN_PerformanceMode

Description

Performance modes of the device.

Since: 9

| Value | Description |

|---|---|

| OH_NN_PERFORMANCE_NONE | No performance mode preference. |

| OH_NN_PERFORMANCE_LOW | Low power consumption mode. |

| OH_NN_PERFORMANCE_MEDIUM | Medium performance mode. |

| OH_NN_PERFORMANCE_HIGH | High performance mode. |

| OH_NN_PERFORMANCE_EXTREME | Ultimate performance mode. |

OH_NN_Priority

enum OH_NN_Priority

Description

Priorities of a model inference task.

Since: 9

| Value | Description |

|---|---|

| OH_NN_PRIORITY_NONE | No priority preference. |

| OH_NN_PRIORITY_LOW | Low priority. |

| OH_NN_PRIORITY_MEDIUM | Medium priority. |

| OH_NN_PRIORITY_HIGH | High priority. |

OH_NN_ReturnCode

enum OH_NN_ReturnCode

Description

Error codes for NNRt.

Since: 9

| Value | Description |

|---|---|

| OH_NN_SUCCESS | The operation is successful. |

| OH_NN_FAILED | The operation failed. |

| OH_NN_INVALID_PARAMETER | Invalid parameter. |

| OH_NN_MEMORY_ERROR | Memory-related error, for example, insufficient memory, memory data copy failure, or memory application failure. |

| OH_NN_OPERATION_FORBIDDEN | Invalid operation. |

| OH_NN_NULL_PTR | Null pointer. |

| OH_NN_INVALID_FILE | Invalid file. |

| OH_NN_UNAVALIDABLE_DEVICE (deprecated) | Hardware error, for example, HDI service crash. Deprecated: This API is deprecated since API version 11. Substitute: OH_NN_UNAVAILABLE_DEVICE is recommended. |

| OH_NN_INVALID_PATH | Invalid path. |

| OH_NN_TIMEOUT<sup>11+</sup> | Execution timed out. This API is supported since API version 11. |

| OH_NN_UNSUPPORTED<sup>11+</sup> | Not supported. This API is supported since API version 11. |

| OH_NN_CONNECTION_EXCEPTION<sup>11+</sup> | Connection error. This API is supported since API version 11. |

| OH_NN_SAVE_CACHE_EXCEPTION<sup>11+</sup> | Failed to save the cache. This API is supported since API version 11. |

| OH_NN_DYNAMIC_SHAPE<sup>11+</sup> | Dynamic shape. This API is supported since API version 11. |

| OH_NN_UNAVAILABLE_DEVICE<sup>11+</sup> | Hardware error, for example, HDI service crash. This API is supported since API version 11. |
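
When logging failures, it can help to turn a return code into the description listed above. The sketch below is an illustration only. The enum is a local stand-in so that the example compiles without the SDK header: its names come from the table, but its numeric values are not taken from the header. `DescribeReturnCode` and `SelfCheck` are hypothetical helpers, not NNRt APIs. When building against the real `neural_network_runtime_type.h`, the stand-in enum would be dropped.

```c
#include <assert.h>
#include <string.h>

/* Local stand-in for OH_NN_ReturnCode (assumption: values are placeholders,
 * only the enumerator names are taken from the table above). */
typedef enum {
    OH_NN_SUCCESS,
    OH_NN_FAILED,
    OH_NN_INVALID_PARAMETER,
    OH_NN_MEMORY_ERROR,
    OH_NN_OPERATION_FORBIDDEN,
    OH_NN_NULL_PTR,
    OH_NN_INVALID_FILE,
    OH_NN_INVALID_PATH,
    OH_NN_TIMEOUT,
    OH_NN_UNSUPPORTED,
    OH_NN_CONNECTION_EXCEPTION,
    OH_NN_SAVE_CACHE_EXCEPTION,
    OH_NN_DYNAMIC_SHAPE,
    OH_NN_UNAVAILABLE_DEVICE
} OH_NN_ReturnCode;

/* Hypothetical helper: maps a return code to the short description from the table. */
static const char *DescribeReturnCode(OH_NN_ReturnCode code)
{
    switch (code) {
        case OH_NN_SUCCESS:              return "The operation is successful";
        case OH_NN_FAILED:               return "Operation failed";
        case OH_NN_INVALID_PARAMETER:    return "Invalid parameter";
        case OH_NN_MEMORY_ERROR:         return "Memory-related error";
        case OH_NN_OPERATION_FORBIDDEN:  return "Invalid operation";
        case OH_NN_NULL_PTR:             return "Null pointer";
        case OH_NN_INVALID_FILE:         return "Invalid file";
        case OH_NN_INVALID_PATH:         return "Invalid path";
        case OH_NN_TIMEOUT:              return "Execution timed out";
        case OH_NN_UNSUPPORTED:          return "Not supported";
        case OH_NN_CONNECTION_EXCEPTION: return "Connection error";
        case OH_NN_SAVE_CACHE_EXCEPTION: return "Failed to save the cache";
        case OH_NN_DYNAMIC_SHAPE:        return "Dynamic shape";
        case OH_NN_UNAVAILABLE_DEVICE:   return "Hardware error";
        default:                         return "Unknown or deprecated code";
    }
}

/* Usage example: every call result is compared against OH_NN_SUCCESS first. */
static void SelfCheck(void)
{
    OH_NN_ReturnCode ret = OH_NN_TIMEOUT; /* pretend a call returned this */
    if (ret != OH_NN_SUCCESS) {
        assert(strcmp(DescribeReturnCode(ret), "Execution timed out") == 0);
    }
}
```

The deprecated OH_NN_UNAVALIDABLE_DEVICE is deliberately left to the default branch, so new code only names OH_NN_UNAVAILABLE_DEVICE.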

OH_NN_TensorType

enum OH_NN_TensorType

Description

Defines tensor types.

Tensors are usually used to set the input, output, and operator parameters of a model. When a tensor is used as the input or output of a model (or operator), set the tensor type to OH_NN_TENSOR. When the tensor is used as an operator parameter, select an enumerated value other than OH_NN_TENSOR as the tensor type. Assume that pad of the OH_NN_OPS_CONV2D operator is being set. You need to set the type attribute of the OH_NN_Tensor instance to OH_NN_CONV2D_PAD. The settings of other operator parameters are similar. The enumerated values are named in the format OH_NN_{Operator name}_{Attribute name}.
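
The rule above (OH_NN_TENSOR for model or operator inputs and outputs, any other enumerated value for operator parameters) can be sketched as follows. This is a simplified illustration, not the real type definitions: `DemoTensorType` is a stand-in that lists only three of the enumerated values with placeholder numeric values, `DemoTensor` is a hypothetical reduction of OH_NN_Tensor that models only its type attribute, and `IsOperatorParameter` is a hypothetical helper.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>

/* Stand-in subset of OH_NN_TensorType (assumption: numeric values are placeholders). */
typedef enum {
    OH_NN_TENSOR,          /* input or output of a model (or operator) */
    OH_NN_CONV2D_STRIDES,  /* strides parameter of the Conv2D operator */
    OH_NN_CONV2D_PAD       /* pad parameter of the Conv2D operator */
} DemoTensorType;

/* Hypothetical, reduced stand-in for OH_NN_Tensor: only the type attribute is modelled. */
typedef struct {
    DemoTensorType type;
} DemoTensor;

/* Returns true when the tensor describes an operator parameter rather than data. */
static bool IsOperatorParameter(const DemoTensor *tensor)
{
    assert(tensor != NULL);
    return tensor->type != OH_NN_TENSOR;
}
```

In this sketch, a tensor that carries the pad of a Conv2D operator would be declared as `DemoTensor pad = { OH_NN_CONV2D_PAD };`, while the operator's data input would use `OH_NN_TENSOR`.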

Since: 9

| Value | Description |

|---|---|

| OH_NN_TENSOR | Used when the tensor is used as the input or output of a model (or operator). |

| OH_NN_ADD_ACTIVATIONTYPE | Used when the tensor is used as the activationType parameter of the Add operator. |

| OH_NN_AVG_POOL_KERNEL_SIZE | Used when the tensor is used as the kernel_size parameter of the AvgPool operator. |

| OH_NN_AVG_POOL_STRIDE | Used when the tensor is used as the stride parameter of the AvgPool operator. |

| OH_NN_AVG_POOL_PAD_MODE | Used when the tensor is used as the pad_mode parameter of the AvgPool operator. |

| OH_NN_AVG_POOL_PAD | Used when the tensor is used as the pad parameter of the AvgPool operator. |

| OH_NN_AVG_POOL_ACTIVATION_TYPE | Used when the tensor is used as the activation_type parameter of the AvgPool operator. |

| OH_NN_BATCH_NORM_EPSILON | Used when the tensor is used as the epsilon parameter of the BatchNorm operator. |

| OH_NN_BATCH_TO_SPACE_ND_BLOCKSIZE | Used when the tensor is used as the blockSize parameter of the BatchToSpaceND operator. |

| OH_NN_BATCH_TO_SPACE_ND_CROPS | Used when the tensor is used as the crops parameter of the BatchToSpaceND operator. |

| OH_NN_CONCAT_AXIS | Used when the tensor is used as the axis parameter of the Concat operator. |

| OH_NN_CONV2D_STRIDES | Used when the tensor is used as the strides parameter of the Conv2D operator. |

| OH_NN_CONV2D_PAD | Used when the tensor is used as the pad parameter of the Conv2D operator. |

| OH_NN_CONV2D_DILATION | Used when the tensor is used as the dilation parameter of the Conv2D operator. |

| OH_NN_CONV2D_PAD_MODE | Used when the tensor is used as the padMode parameter of the Conv2D operator. |

| OH_NN_CONV2D_ACTIVATION_TYPE | Used when the tensor is used as the activationType parameter of the Conv2D operator. |

| OH_NN_CONV2D_GROUP | Used when the tensor is used as the group parameter of the Conv2D operator. |

| OH_NN_CONV2D_TRANSPOSE_STRIDES | Used when the tensor is used as the strides parameter of the Conv2DTranspose operator. |

| OH_NN_CONV2D_TRANSPOSE_PAD | Used when the tensor is used as the pad parameter of the Conv2DTranspose operator. |

| OH_NN_CONV2D_TRANSPOSE_DILATION | Used when the tensor is used as the dilation parameter of the Conv2DTranspose operator. |

| OH_NN_CONV2D_TRANSPOSE_OUTPUT_PADDINGS | Used when the tensor is used as the outputPaddings parameter of the Conv2DTranspose operator. |

| OH_NN_CONV2D_TRANSPOSE_PAD_MODE | Used when the tensor is used as the padMode parameter of the Conv2DTranspose operator. |

| OH_NN_CONV2D_TRANSPOSE_ACTIVATION_TYPE | Used when the tensor is used as the activationType parameter of the Conv2DTranspose operator. |

| OH_NN_CONV2D_TRANSPOSE_GROUP | Used when the tensor is used as the group parameter of the Conv2DTranspose operator. |

| OH_NN_DEPTHWISE_CONV2D_NATIVE_STRIDES | Used when the tensor is used as the strides parameter of the DepthwiseConv2dNative operator. |

| OH_NN_DEPTHWISE_CONV2D_NATIVE_PAD | Used when the tensor is used as the pad parameter of the DepthwiseConv2dNative operator. |

| OH_NN_DEPTHWISE_CONV2D_NATIVE_DILATION | Used when the tensor is used as the dilation parameter of the DepthwiseConv2dNative operator. |

| OH_NN_DEPTHWISE_CONV2D_NATIVE_PAD_MODE | Used when the tensor is used as the padMode parameter of the DepthwiseConv2dNative operator. |

| OH_NN_DEPTHWISE_CONV2D_NATIVE_ACTIVATION_TYPE | Used when the tensor is used as the activationType parameter of the DepthwiseConv2dNative operator. |

| OH_NN_DIV_ACTIVATIONTYPE | Used when the tensor is used as the activationType parameter of the Div operator. |

| OH_NN_ELTWISE_MODE | Used when the tensor is used as the mode parameter of the Eltwise operator. |

| OH_NN_FULL_CONNECTION_AXIS | Used when the tensor is used as the axis parameter of the FullConnection operator. |

| OH_NN_FULL_CONNECTION_ACTIVATIONTYPE | Used when the tensor is used as the activationType parameter of the FullConnection operator. |

| OH_NN_MATMUL_TRANSPOSE_A | Used when the tensor is used as the transposeA parameter of the Matmul operator. |

| OH_NN_MATMUL_TRANSPOSE_B | Used when the tensor is used as the transposeB parameter of the Matmul operator. |

| OH_NN_MATMUL_ACTIVATION_TYPE | Used when the tensor is used as the activationType parameter of the Matmul operator. |

| OH_NN_MAX_POOL_KERNEL_SIZE | Used when the tensor is used as the kernel_size parameter of the MaxPool operator. |

| OH_NN_MAX_POOL_STRIDE | Used when the tensor is used as the stride parameter of the MaxPool operator. |

| OH_NN_MAX_POOL_PAD_MODE | Used when the tensor is used as the pad_mode parameter of the MaxPool operator. |

| OH_NN_MAX_POOL_PAD | Used when the tensor is used as the pad parameter of the MaxPool operator. |

| OH_NN_MAX_POOL_ACTIVATION_TYPE | Used when the tensor is used as the activation_type parameter of the MaxPool operator. |

| OH_NN_MUL_ACTIVATION_TYPE | Used when the tensor is used as the activationType parameter of the Mul operator. |

| OH_NN_ONE_HOT_AXIS | Used when the tensor is used as the axis parameter of the OneHot operator. |

| OH_NN_PAD_CONSTANT_VALUE | Used when the tensor is used as the constant_value parameter of the Pad operator. |

| OH_NN_SCALE_ACTIVATIONTYPE | Used when the tensor is used as the activationType parameter of the Scale operator. |

| OH_NN_SCALE_AXIS | Used when the tensor is used as the axis parameter of the Scale operator. |

| OH_NN_SOFTMAX_AXIS | Used when the tensor is used as the axis parameter of the Softmax operator. |

| OH_NN_SPACE_TO_BATCH_ND_BLOCK_SHAPE | Used when the tensor is used as the BlockShape parameter of the SpaceToBatchND operator. |

| OH_NN_SPACE_TO_BATCH_ND_PADDINGS | Used when the tensor is used as the Paddings parameter of the SpaceToBatchND operator. |

| OH_NN_SPLIT_AXIS | Used when the tensor is used as the Axis parameter of the Split operator. |

| OH_NN_SPLIT_OUTPUT_NUM | Used when the tensor is used as the OutputNum parameter of the Split operator. |

| OH_NN_SPLIT_SIZE_SPLITS | Used when the tensor is used as the SizeSplits parameter of the Split operator. |

| OH_NN_SQUEEZE_AXIS | Used when the tensor is used as the Axis parameter of the Squeeze operator. |

| OH_NN_STACK_AXIS | Used when the tensor is used as the Axis parameter of the Stack operator. |

| OH_NN_STRIDED_SLICE_BEGIN_MASK | Used when the tensor is used as the BeginMask parameter of the StridedSlice operator. |

| OH_NN_STRIDED_SLICE_END_MASK | Used when the tensor is used as the EndMask parameter of the StridedSlice operator. |

| OH_NN_STRIDED_SLICE_ELLIPSIS_MASK | Used when the tensor is used as the EllipsisMask parameter of the StridedSlice operator. |

| OH_NN_STRIDED_SLICE_NEW_AXIS_MASK | Used when the tensor is used as the NewAxisMask parameter of the StridedSlice operator. |

| OH_NN_STRIDED_SLICE_SHRINK_AXIS_MASK | Used when the tensor is used as the ShrinkAxisMask parameter of the StridedSlice operator. |

| OH_NN_SUB_ACTIVATIONTYPE | Used when the tensor is used as the ActivationType parameter of the Sub operator. |

| OH_NN_REDUCE_MEAN_KEEP_DIMS | Used when the tensor is used as the keep_dims parameter of the ReduceMean operator. |